The people who operate robots are often the ones who really make the magic happen. They include drone pilots for uncrewed aerial vehicles, remote safety drivers for self-driving cars, remote drivers for self-driving forklifts, and puppeteers for interactive entertainment robots. A whole new job category of “robot wrangler” is becoming increasingly common as the number of robot deployments grows. In the past, it was considered shameful to admit that there were humans in the loop of supposedly “fully autonomous” robot systems. We are now transitioning to openly hiring for these roles rather than hiding operators out of sight during robot demonstrations.

In other words, we’re finally recognizing the importance of robot operators. I think it’s high time that we celebrate them and design better interfaces for them. If you’re coming from the consumer tech industry, you’ll likely be surprised by how far behind robotic interfaces have fallen. There is so much room for improvement in robot operator user experience, ergonomics, and performance. Right now, it takes a very special kind of talent to effectively pilot robots.

What if we made operating these robots more accessible, less stressful, and easier to do well? That is a future I’m excited about. I’ve been lucky to work on this challenge across robot manipulation, ocean robots (e.g., adding stereoscopic depth perception to ROV pilot interfaces to improve their work performance), and flying robots (e.g., uncrewed aerial vehicles made by teams at Wing and Zipline). There is nothing quite like seeing what a professional robot operator can do when you put good interfaces in their hands.

Some of my publications in this area include:

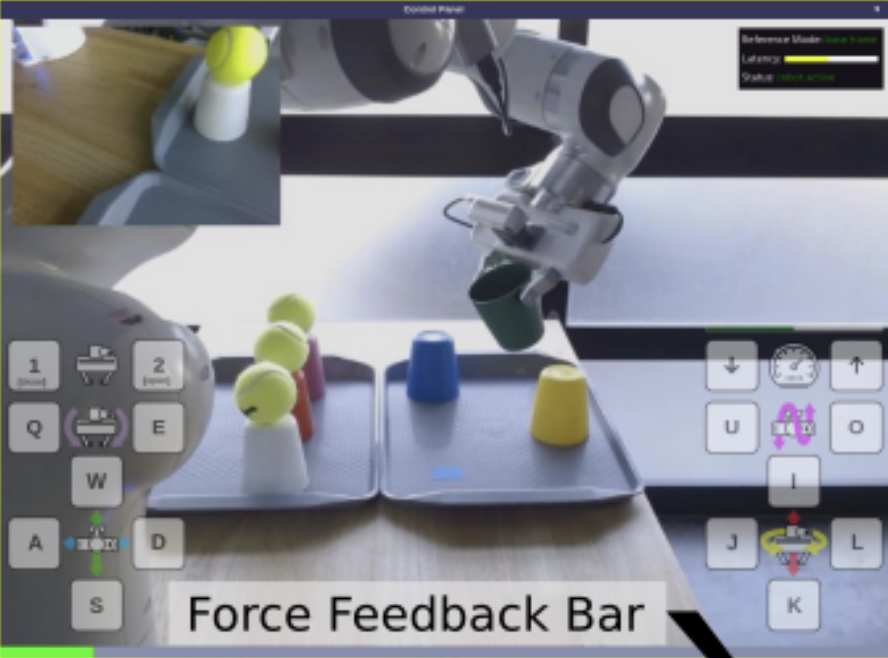

Moortgat-Pick, A., So, P., Sack, M., Cunningham, E., Hughes, B., Adamczyk, A., Sarabakha, A., Takayama, L., & Haddadin, S. (2022). A-RIFT: Visual substitution of force feedback for a zero-cost interface in telemanipulation. Proceedings of IROS 2022.

Elor, A., Thang, T., Hughes, B. P., Crosby, A., Phung, A., Gonzalez, E., Katija, K., Haddock, S. H. D., Martin, E. J., & Takayama, L. (2021). Catching jellies in immersive virtual reality: A comparative teleoperation study of ROVs in underwater capture tasks. ACM VRST 2021. [26% acceptance rate; winner of the best paper award]

Hughes, B. P., Weatherwax, K., Moxley-Fuentes, M., Kaur, G., Davidenko, N., & Takayama, L. (2020). The influence of gaming frequency and viewing perspective on a remote robot operation task. Journal of Vision, 20(11). doi:10.1167/jov.20.11.1706

Leeper, A., Hsiao, K., Ciocarlie, M., Takayama, L., & Gossow, D. (2012). Strategies for human-in-the-loop robotic grasping. Proceedings of Human-Robot Interaction: HRI 2012, Boston, MA, 1-8. [25% acceptance rate]
